Publishing our Object Detection Network and Dataset

FRE 2021 is over! It’s been a great week seeing all of the other teams again and competing with one another. We are grateful for the opportunity we had in building the competition environment and shaping this year’s Field Robot Event!

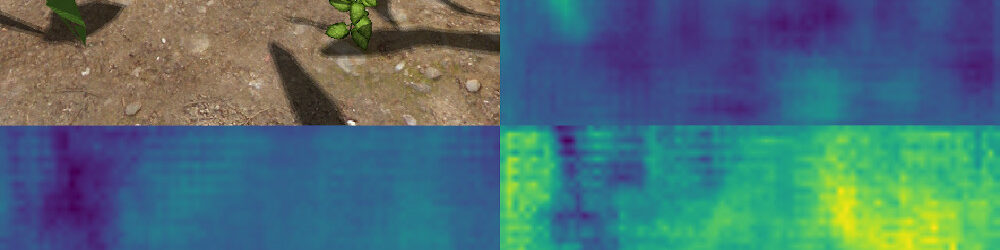

We were especially pleased with the organizers’ decision to include realistic 3D plant models for the weed detection task. For a long time, simple color-based detectors were sufficient to solve the detection tasks at the Field Robot Event. However, such approaches are not adequate for plant recognition in a realistic scenario: on a real field, robots will have to distinguish between multiple plant species (all of which are green) in order to perform weeding, phenotyping and other tasks. Deep learning has matured over the past several years and seems to be the most suitable tool to tackle this challenge.

In the conversations we have had throughout the week with other teams and the jury, we noticed that while some teams have already embraced the shift towards deep learning, others are still sceptical of its benefits or wary of the effort and cost of implementing such an approach.

To make deep-learning-based object recognition more accessible, and to improve cooperation and exchange between teams, we are open-sourcing the deep-learning-based weed recognition software that we used at this year’s online competition (FRE 2021). We are also publishing our dataset along with complementary Jupyter notebooks that illustrate the training process, to help interested people get started.

You will find everything you need at the following links:

- GitHub: https://github.com/Kamaro-Engineering/fre21_object_detection

- Model (ONNX format): https://nextcloud.kamaro-engineering.de/s/j2FXbdBSsGS69jt

- Dataset: https://nextcloud.kamaro-engineering.de/s/kJpxCYNzA877pSy

Our implementation is based on PyTorch and uses Python 3 and ROS Noetic. A CUDA-capable GPU with at least 8 GB of memory is recommended if you want to train your own models. For inference (i.e. running images through the detector on a robot or in a simulation), we export the model to the ONNX format and execute it on the CPU, so no GPU is required on the robot.

We encourage you to have a look at our Jupyter notebooks, download our dataset, tweak parameters and train new models (e.g. by building on the excellent selection of pretrained models readily available in PyTorch).

If the pandemic allows, there will hopefully be an offline competition next year. That will require a new dataset, as this year’s dataset consists entirely of Gazebo screenshots. If you are interested in collaborating on (realistic) datasets for next year’s competition, we would be happy to work with you. Feel free to reach out to us via email! Collecting and annotating data is cumbersome, but the work parallelizes easily when more people take part. Collaboration will also increase the diversity of the dataset, which in turn will make any models trained on it more robust.

We strongly believe that deep learning is necessary in order to perform realistic, state-of-the-art image recognition in the Field Robot Event. Work done in this field should take place in the open, so that no teams are excluded due to a lack of pre-existing knowledge. If you have any questions regarding our software, or just want to chat about Field Robots and/or Deep Learning, drop us an email and we can meet up online some time!

This work has been done by Johannes Barthel, Thomas Friedel, Leon Tuschla and Johannes Bier.

Kamaro
